In the parts 1-6 of my “Night sky image processing” Series I wrote about the different stages of night sky image processing. In this article I want to put all the pieces together and develop an “automatic star recognizer” which takes an astro-image as input and outputs a list of the recognized stars with their HFD and FWHM values.

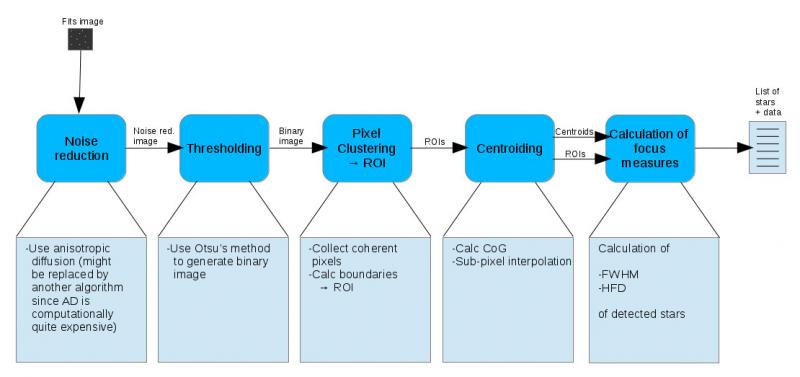

The star recognizer processing pipeline

Basically the processing pipeline is as follows:

ROI = Region of interest

In order to achieve this I use most of the concepts I have looked into earlier:

- Part 1: Noise reduction using anisotropic diffusion

- Part 2: Image binarization using Otsu’s thresholding algorithm

- Part 3: Star clustering

- Part 4: Centroiding with sub-pixel accuracy

- Part 5: Measuring FWHM of a star using curve fitting

- Part 6: Measuring the Half Flux Diameter (HFD) of a star

As input I used this FITS image for testing.

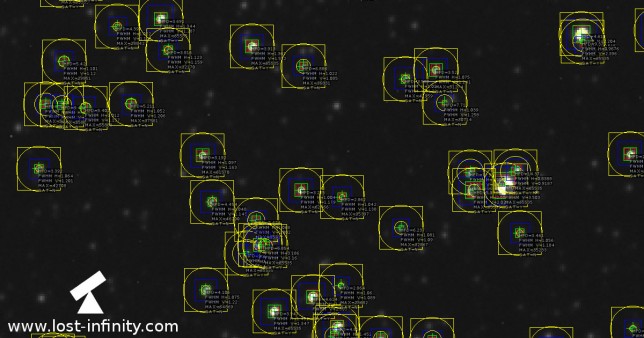

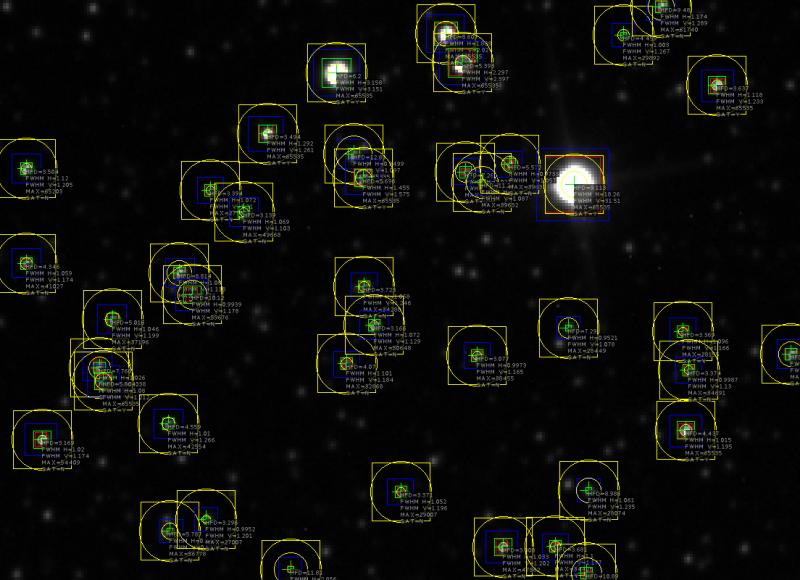

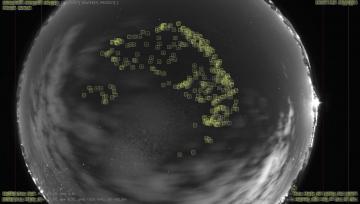

The outcome

The program prints a list with recognized stars (see listing below). Furthermore it creates an output image with all recognized stars. In addition the program marks each star with its most important data (like HFD, FWHM, maximum pixel value, …). Find the result image below. A full resolution version is available here. The legend below explains the meaning of the different markings within the image.

Legend

- Red rectangle – region of interest (ROI) determined based on boundaries of clustered pixels

- Blue rectangle – rectangular region with side length L = max(width, height) of ROI and a border of +3 pixels in each direction (3 turned out to be a good value after doing some tests)

- Green rectangle – rectangle around calculated centroid position (marked with a green cross)

- Yellow rectangle – region used to calculate the HFD

Below is the program output: A list of detected stars with HFD and FWHM values. The porgam creates the corresponding output image “out.jpeg” in the working directory.

$ ./star_recognizer test.fits Opening file test.fits Recognized 41 stars... cogCentroid=( 8.93043, 58.0221), , maxPixValue: 65203.7, sat: 0, hfd: 3.5045, fwhmHorz: 1.12046, fwhmVert: 1.20463 cogCentroid=( 8.98682, 90.6994), , maxPixValue: 41027.9, sat: 0, hfd: 4.34553, fwhmHorz: 1.05919, fwhmVert: 1.17354 cogCentroid=( 14.5982, 151.493), , maxPixValue: 54409.2, sat: 0, hfd: 3.16927, fwhmHorz: 1.01982, fwhmVert: 1.17433 cogCentroid=( 34.2102, 126.658), , maxPixValue: 65535, sat: 1, hfd: 7.76841, fwhmHorz: 1.02607, fwhmVert: 1.03844 cogCentroid=( 35.5147, 131.183), , maxPixValue: 65535, sat: 1, hfd: 5.80448, fwhmHorz: 1.08041, fwhmVert: 1.21222 cogCentroid=( 38.6856, 110.037), , maxPixValue: 37196.2, sat: 0, hfd: 5.01614, fwhmHorz: 1.04594, fwhmVert: 1.1987 cogCentroid=( 58.0112, 146.093), , maxPixValue: 42554.8, sat: 0, hfd: 4.55932, fwhmHorz: 1.00959, fwhmVert: 1.2662 cogCentroid=( 58.4565, 182.915), , maxPixValue: 56778.5, sat: 0, hfd: 5.78698, fwhmHorz: 0.340465, fwhmVert: 0.187672 cogCentroid=( 61.8648, 94.0137), , maxPixValue: 59676.3, sat: 0, hfd: 8.8138, fwhmHorz: 1.08009, fwhmVert: 1.11814 cogCentroid=( 66.2654, 101.394), , maxPixValue: 59676.3, sat: 0, hfd: 10.1156, fwhmHorz: 0.993935, fwhmVert: 1.17848 cogCentroid=( 71.1034, 178.928), , maxPixValue: 27007.6, sat: 0, hfd: 3.29649, fwhmHorz: 0.995213, fwhmVert: 1.20095 cogCentroid=( 72.5221, 65.4851), , maxPixValue: 27780.6, sat: 0, hfd: 3.39423, fwhmHorz: 1.07163, fwhmVert: 1.30951 cogCentroid=( 83.9159, 73.12), , maxPixValue: 49668.5, sat: 0, hfd: 3.13855, fwhmHorz: 1.06936, fwhmVert: 1.10282 cogCentroid=( 92.2189, 45.8812), , maxPixValue: 65535, sat: 1, hfd: 3.49375, fwhmHorz: 1.29158, fwhmVert: 1.26071 cogCentroid=( 100.226, 196.349), , maxPixValue: 30287.7, sat: 0, hfd: 11.8119, fwhmHorz: 2.85621, fwhmVert: 1.45412 cogCentroid=( 116.051, 25.1154), , maxPixValue: 65535, sat: 1, hfd: 6.20024, fwhmHorz: 3.1582, fwhmVert: 3.15077 cogCentroid=( 119.412, 125.329), , maxPixValue: 32868.3, sat: 0, hfd: 4.07041, fwhmHorz: 1.10123, fwhmVert: 1.1844 cogCentroid=( 122.067, 53.0343), , maxPixValue: 65535, sat: 1, hfd: 12.8323, fwhmHorz: 0.949879, fwhmVert: 1.09659 cogCentroid=( 125.16, 61.2535), , maxPixValue: 65535, sat: 1, hfd: 5.69585, fwhmHorz: 1.45516, fwhmVert: 1.57514 cogCentroid=( 125.411, 98.6289), , maxPixValue: 34288.7, sat: 0, hfd: 3.72304, fwhmHorz: 1.06798, fwhmVert: 1.24603 cogCentroid=( 128.932, 112.104), , maxPixValue: 50648.2, sat: 0, hfd: 3.1657, fwhmHorz: 1.07181, fwhmVert: 1.12934 cogCentroid=( 138.286, 169.441), , maxPixValue: 29007.5, sat: 0, hfd: 3.37104, fwhmHorz: 1.05232, fwhmVert: 1.19603 cogCentroid=( 153.714, 11.5674), , maxPixValue: 65535, sat: 1, hfd: 8.6069, fwhmHorz: 1.88784, fwhmVert: 2.02444 cogCentroid=( 159.576, 21.4134), , maxPixValue: 65535, sat: 1, hfd: 5.39341, fwhmHorz: 2.297, fwhmVert: 2.39654 cogCentroid=( 160.564, 59.2009), , maxPixValue: 34556.7, sat: 0, hfd: 7.27967, fwhmHorz: 1.02705, fwhmVert: 1.13518 cogCentroid=( 164.527, 122.593), , maxPixValue: 38455.2, sat: 0, hfd: 3.07726, fwhmHorz: 0.997333, fwhmVert: 1.16452 cogCentroid=( 166.155, 62.7757), , maxPixValue: 39632.9, sat: 0, hfd: 11.2427, fwhmHorz: 0.970297, fwhmVert: 1.08677 cogCentroid=( 173.433, 188.434), , maxPixValue: 47862, sat: 0, hfd: 3.30765, fwhmHorz: 1.03261, fwhmVert: 1.20188 cogCentroid=( 175.75, 56.4406), , maxPixValue: 39632.9, sat: 0, hfd: 5.5724, fwhmHorz: 0.973331, fwhmVert: 1.05672 cogCentroid=( 197.915, 63.4097), , maxPixValue: 65535, sat: 1, hfd: 9.11323, fwhmHorz: 18.2627, fwhmVert: 31.5109 cogCentroid=( 191.482, 188.314), , maxPixValue: 34426.8, sat: 0, hfd: 3.6808, fwhmHorz: 1.0998, fwhmVert: 1.17717 cogCentroid=( 195.951, 112.961), , maxPixValue: 28449.8, sat: 0, hfd: 7.28967, fwhmHorz: 0.952075, fwhmVert: 1.07847 cogCentroid=( 202.338, 197.435), , maxPixValue: 34550.2, sat: 0, hfd: 10.8919, fwhmHorz: 1.0349, fwhmVert: 1.12476 cogCentroid=( 203.002, 169.065), , maxPixValue: 28074.4, sat: 0, hfd: 8.98569, fwhmHorz: 1.06147, fwhmVert: 1.23468 cogCentroid=( 215.011, 11.9268), , maxPixValue: 29892.7, sat: 0, hfd: 4.45885, fwhmHorz: 1.0026, fwhmVert: 1.26654 cogCentroid=( 228.026, 2.22008), , maxPixValue: 61740.2, sat: 0, hfd: 9.48015, fwhmHorz: 1.17352, fwhmVert: 1.28864 cogCentroid=( 235.523, 114.28), , maxPixValue: 28155.2, sat: 0, hfd: 3.36922, fwhmHorz: 1.09564, fwhmVert: 1.16628 cogCentroid=( 236.471, 148.343), , maxPixValue: 65535, sat: 1, hfd: 4.43702, fwhmHorz: 1.01504, fwhmVert: 1.19502 cogCentroid=( 237.539, 127.613), , maxPixValue: 34691.5, sat: 0, hfd: 3.37375, fwhmHorz: 0.998732, fwhmVert: 1.12967 cogCentroid=( 247.325, 29.209), , maxPixValue: 65535, sat: 1, hfd: 3.63678, fwhmHorz: 1.11791, fwhmVert: 1.23304 cogCentroid=( 272.655, 122), , maxPixValue: 58896, sat: 0, hfd: 8.86749, fwhmHorz: 1.0888, fwhmVert: 1.1378

The C++ implementation

NOTE: All the source codes of this “Night sky image processing” series presented in part 1-7 are also available on github in the star_recognizer repository.

Below is the corresponding C++ code. You can also just download it here. In order to compile it, the following dynamic libraries are required (CImg is a pure template “library”):

- libX11.so

- libpthread.so

- libCCfits.so

- libgsl.so

- libgslcblas.so

If the required libraries exist on the system, the following compile command should work without problems:

g++ star_recognizer.cpp -o star_recognizer -lX11 -lpthread -lCCfits -lcfitsio -lgsl -lgslcblas -std=c++0x

Here is the corresponding C++ implementation:

/**

* Star recognizer using the CImg library and CCFits.

*

* Copyright (C) 2015 Carsten Schmitt

*

* This program is free software: you can redistribute it and/or modify

* it under the terms of the GNU General Public License as published by

* the Free Software Foundation, either version 3 of the License, or

* (at your option) any later version.

*

* This program is distributed in the hope that it will be useful,

* but WITHOUT ANY WARRANTY; without even the implied warranty of

* MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

* GNU General Public License for more details.

*

* You should have received a copy of the GNU General Public License

* along with this program. If not, see <https://www.gnu.org/licenses/>.

*/

#include <iostream>

#include <assert.h>

#include <CImg.h>

#include <CCfits/CCfits>

#include <tuple>

#include <functional>

#include <list>

#include <set>

#include <array>

#include <vector>

#include <gsl/gsl_multifit_nlin.h>

using namespace std;

using namespace cimg_library;

using namespace CCfits;

typedef tuple<int /*x*/,int /*y*/> PixelPosT;

typedef set<PixelPosT> PixelPosSetT;

typedef list<PixelPosT> PixelPosListT;

typedef tuple<float, float> PixSubPosT;

typedef tuple<float /*x1*/, float /*y1*/, float /*x2*/, float /*y2*/> FrameT;

struct StarInfoT {

FrameT clusterFrame;

FrameT cogFrame;

FrameT hfdFrame;

PixSubPosT cogCentroid;

PixSubPosT subPixelInterpCentroid;

float hfd;

float fwhmHorz;

float fwhmVert;

float maxPixValue;

bool saturated;

};

typedef list<StarInfoT> StarInfoListT;

/**

* Get all pixels inside a radius: https://stackoverflow.com/questions/14487322/get-all-pixel-array-inside-circle

* Algorithm: https://en.wikipedia.org/wiki/Midpoint_circle_algorithm

*/

bool

insideCircle(float inX /*pos of x*/, float inY /*pos of y*/, float inCenterX, float inCenterY, float inRadius)

{

return (pow(inX - inCenterX, 2.0) + pow(inY - inCenterY, 2.0) <= pow(inRadius, 2.0));

}

void

readFile(CImg<float> & inImg, const string & inFilename, long * outBitPix = 0)

{

std::auto_ptr<FITS> pInfile(new FITS(inFilename, Read, true));

PHDU & image = pInfile->pHDU();

if (outBitPix) {

*outBitPix = image.bitpix();

}

inImg.resize(image.axis(0) /*x*/, image.axis(1) /*y*/, 1/*z*/, 1 /*1 color*/);

// NOTE: At this point we assume that there is only 1 layer.

std::valarray<unsigned long> imgData;

image.read(imgData);

cimg_forXY(inImg, x, y) { inImg(x, inImg.height() - y - 1) = imgData[inImg.offset(x, y)]; }

}

void

thresholdOtsu(const CImg<float> & inImg, long inBitPix, CImg<float> * outBinImg)

{

CImg<> hist = inImg.get_histogram(pow(2.0, inBitPix));

float sum = 0;

cimg_forX(hist, pos) { sum += pos * hist[pos]; }

float numPixels = inImg.width() * inImg.height();

float sumB = 0, wB = 0, max = 0.0;

float threshold1 = 0.0, threshold2 = 0.0;

cimg_forX(hist, i) {

wB += hist[i];

if (! wB) { continue; }

float wF = numPixels - wB;

if (! wF) { break; }

sumB += i * hist[i];

float mF = (sum - sumB) / wF;

float mB = sumB / wB;

float diff = mB - mF;

float bw = wB * wF * pow(diff, 2.0);

if (bw >= max) {

threshold1 = i;

if (bw > max) {

threshold2 = i;

}

max = bw;

}

} // end loop

float th = (threshold1 + threshold2) / 2.0;

*outBinImg = inImg; // Create a copy

outBinImg->threshold(th);

}

/**

* Removes all white neighbours arond pixel from whitePixels

* if they exist and adds them to pixelsToBeProcessed.

*/

void

getAndRemoveNeighbours(PixelPosT inCurPixelPos, PixelPosSetT * inoutWhitePixels, PixelPosListT * inoutPixelsToBeProcessed)

{

const size_t _numPixels = 8, _x = 0, _y = 1;

const int offsets[_numPixels][2] = { { -1, -1 }, { 0, -1 }, { 1, -1 },

{ -1, 0 }, { 1, 0 },

{ -1, 1 }, { 0, 1 }, { 1, 1 } };

for (size_t p = 0; p < _numPixels; ++p) {

PixelPosT curPixPos(std::get<0>(inCurPixelPos) + offsets[p][_x], std::get<1>(inCurPixelPos) + offsets[p][_y]);

PixelPosSetT::iterator itPixPos = inoutWhitePixels->find(curPixPos);

if (itPixPos != inoutWhitePixels->end()) {

const PixelPosT & curPixPos = *itPixPos;

inoutPixelsToBeProcessed->push_back(curPixPos);

inoutWhitePixels->erase(itPixPos); // Remove white pixel from "white set" since it has been now processed

}

}

return;

}

template<typename T> void

clusterStars(const CImg<T> & inImg, StarInfoListT * outStarInfos)

{

PixelPosSetT whitePixels;

cimg_forXY(inImg, x, y) {

if (inImg(x, y)) {

whitePixels.insert(whitePixels.end(), PixelPosT(x, y));

}

}

// Iterate over white pixels as long as set is not empty

while (whitePixels.size()) {

PixelPosListT pixelsToBeProcessed;

PixelPosSetT::iterator itWhitePixPos = whitePixels.begin();

pixelsToBeProcessed.push_back(*itWhitePixPos);

whitePixels.erase(itWhitePixPos);

FrameT frame(inImg.width(), inImg.height(), 0, 0);

while(! pixelsToBeProcessed.empty()) {

PixelPosT curPixelPos = pixelsToBeProcessed.front();

// Determine boundaries (min max in x and y directions)

if (std::get<0>(curPixelPos) /*x*/ < std::get<0>(frame) /*x1*/) { std::get<0>(frame) = std::get<0>(curPixelPos); }

if (std::get<0>(curPixelPos) /*x*/ > std::get<2>(frame) /*x2*/) { std::get<2>(frame) = std::get<0>(curPixelPos); }

if (std::get<1>(curPixelPos) /*y*/ < std::get<1>(frame) /*y1*/) { std::get<1>(frame) = std::get<1>(curPixelPos); }

if (std::get<1>(curPixelPos) /*y*/ > std::get<3>(frame) /*y2*/) { std::get<3>(frame) = std::get<1>(curPixelPos); }

getAndRemoveNeighbours(curPixelPos, & whitePixels, & pixelsToBeProcessed);

pixelsToBeProcessed.pop_front();

}

// Create new star-info and set cluster-frame.

// NOTE: we may use new to avoid copy of StarInfoT...

StarInfoT starInfo;

starInfo.clusterFrame = frame;

outStarInfos->push_back(starInfo);

}

}

float

calcIx2(const CImg<float> & img, int x)

{

float Ix = 0;

cimg_forY(img, y) { Ix += pow(img(x, y), 2.0) * (float) x; }

return Ix;

}

float

calcJy2(const CImg<float> & img, int y)

{

float Iy = 0;

cimg_forX(img, x) { Iy += pow(img(x, y), 2.0) * (float) y; }

return Iy;

}

// Calculate Intensity Weighted Center (IWC)

void

calcIntensityWeightedCenter(const CImg<float> & inImg, float * outX, float * outY)

{

assert(outX && outY);

// Determine weighted centroid - See https://cdn.intechopen.com/pdfs-wm/26716.pdf

float Imean2 = 0, Jmean2 = 0, Ixy2 = 0;

for(size_t i = 0; i < inImg.width(); ++i) {

Imean2 += calcIx2(inImg, i);

cimg_forY(inImg, y) { Ixy2 += pow(inImg(i, y), 2.0); }

}

for(size_t i = 0; i < inImg.height(); ++i) {

Jmean2 += calcJy2(inImg, i);

}

*outX = Imean2 / Ixy2;

*outY = Jmean2 / Ixy2;

}

void

calcSubPixelCenter(const CImg<float> & inImg, float * outX, float * outY, size_t inNumIter = 10 /*num iterations*/)

{

// Sub pixel interpolation

float c, a1, a2, a3, a4, b1, b2, b3, b4;

float a1n, a2n, a3n, a4n, b1n, b2n, b3n, b4n;

assert(inImg.width() == 3 && inImg.height() == 3);

b1 = inImg(0, 0); a2 = inImg(1, 0); b2 = inImg(2, 0);

a1 = inImg(0, 1); c = inImg(1, 1); a3 = inImg(2, 1);

b4 = inImg(0, 2); a4 = inImg(1, 2); b3 = inImg(2, 2);

for (size_t i = 0; i < inNumIter; ++i) {

float c2 = 2 * c;

float sp1 = (a1 + a2 + c2) / 4;

float sp2 = (a2 + a3 + c2) / 4;

float sp3 = (a3 + a4 + c2) / 4;

float sp4 = (a4 + a1 + c2) / 4;

// New maximum is center

float newC = std::max({ sp1, sp2, sp3, sp4 });

// Calc position of new center

float ad = pow(2.0, -((float) i + 1));

if (newC == sp1) {

*outX = *outX - ad; // to the left

*outY = *outY - ad; // to the top

// Calculate new sub pixel values

b1n = (a1 + a2 + 2 * b1) / 4;

b2n = (c + b2 + 2 * a2) / 4;

b3n = sp3;

b4n = (b4 + c + 2 * a1) / 4;

a1n = (b1n + c + 2 * a1) / 4;

a2n = (b1n + c + 2 * a2) / 4;

a3n = sp2;

a4n = sp4;

} else if (newC == sp2) {

*outX = *outX + ad; // to the right

*outY = *outY - ad; // to the top

// Calculate new sub pixel values

b1n = (2 * a2 + b1 + c) / 4;

b2n = (2 * b2 + a3 + a2) / 4;

b3n = (2 * a3 + b3 + c) / 4;

b4n = sp4;

a1n = sp1;

a2n = (b2n + c + 2 * a2) / 4;

a3n = (b2n + c + 2 * a3) / 4;

a4n = sp3;

} else if (newC == sp3) {

*outX = *outX + ad; // to the right

*outY = *outY + ad; // to the bottom

// Calculate new sub pixel values

b1n = sp1;

b2n = (b2 + 2 * a3 + c) / 4;

b3n = (2 * b3 + a3 + a4) / 4;

b4n = (2 * a4 + b4 + c) / 4;

a1n = sp4;

a2n = sp2;

a3n = (b3n + 2 * a3 + c) / 4;

a4n = (b3n + 2 * a4 + c) / 4;

} else {

*outX = *outX - ad; // to the left

*outY = *outY + ad; // to the bottom

// Calculate new sub pixel values

b1n = (2 * a1 + b1 + c) / 4;

b2n = sp2;

b3n = (c + b3 + 2 * a4) / 4;

b4n = (2 * b4 + a1 + a4) / 4;

a1n = (b4n + 2 * a1 + c) / 4;

a2n = sp1;

a3n = sp3;

a4n = (b4n + 2 * a4 + c) / 4;

}

c = newC; // Oi = Oi+1

a1 = a1n;

a2 = a2n;

a3 = a3n;

a4 = a4n;

b1 = b1n;

b2 = b2n;

b3 = b3n;

b4 = b4n;

}

}

void

calcCentroid(const CImg<float> & inImg, const FrameT & inFrame, PixSubPosT * outPixelPos, PixSubPosT * outSubPixelPos = 0, size_t inNumIterations = 10)

{

// Get frame sub img

CImg<float> subImg = inImg.get_crop(std::get<0>(inFrame), std::get<1>(inFrame), std::get<2>(inFrame), std::get<3>(inFrame));

float & xc = std::get<0>(*outPixelPos);

float & yc = std::get<1>(*outPixelPos);

// 1. Calculate the IWC

calcIntensityWeightedCenter(subImg, & xc, & yc);

if (outSubPixelPos) {

// 2. Round to nearest integer and then iteratively improve.

int xi = floor(xc + 0.5);

int yi = floor(yc + 0.5);

CImg<float> img3x3 = inImg.get_crop(xi - 1 /*x0*/, yi - 1 /*y0*/, xi + 1 /*x1*/, yi + 1 /*y1*/);

// 3. Interpolate using sub-pixel algorithm

float xsc = xi, ysc = yi;

calcSubPixelCenter(img3x3, & xsc, & ysc, inNumIterations);

std::get<0>(*outSubPixelPos) = xsc;

std::get<1>(*outSubPixelPos) = ysc;

}

}

/**

* Expects star centered in the middle of the image (in x and y) and mean background subtracted from image.

*

* HDF calculation: https://www005.upp.so-net.ne.jp/k_miyash/occ02/halffluxdiameter/halffluxdiameter_en.html

* https://www.cyanogen.com/help/maximdl/Half-Flux.htm

*

* NOTE: Currently the accuracy is limited by the insideCircle function (-> sub-pixel accuracy).

* NOTE: The HFD is estimated in case there is no flux (HFD ~ sqrt(2) * inOuterDiameter / 2).

* NOTE: The outer diameter is usually a value which depends on the properties of the optical

* system and also on the seeing conditions. The HFD value calculated depends on this

* outer diameter value.

*/

float

calcHfd(const CImg<float> & inImage, unsigned int inOuterDiameter)

{

// Sum up all pixel values in whole circle

float outerRadius = inOuterDiameter / 2;

float sum = 0, sumDist = 0;

int centerX = ceil(inImage.width() / 2.0);

int centerY = ceil(inImage.height() / 2.0);

cimg_forXY(inImage, x, y) {

if (insideCircle(x, y, centerX, centerY, outerRadius)) {

sum += inImage(x, y);

sumDist += inImage(x, y) * sqrt(pow((float) x - (float) centerX, 2.0f) + pow((float) y - (float) centerY, 2.0f));

}

}

// NOTE: Multiplying with 2 is required since actually just the HFR is calculated above

return (sum ? 2.0 * sumDist / sum : sqrt(2.0) * outerRadius);

}

/**********************************************************************

* Helper classes

**********************************************************************/

struct DataPointT {

float x;

float y;

DataPointT(float inX = 0, float inY = 0) : x(inX), y(inY) {}

};

typedef vector<DataPointT> DataPointsT;

struct GslMultiFitDataT {

float y;

float sigma;

DataPointT pt;

};

typedef vector<GslMultiFitDataT> GslMultiFitParmsT;

/**********************************************************************

* Curve to fit to is supplied by traits.

**********************************************************************/

template <class FitTraitsT>

class CurveFitTmplT {

public:

typedef typename FitTraitsT::CurveParamsT CurveParamsT;

/**

* DataAccessor allows specifying how x,y data is accessed.

* See https://en.wikipedia.org/wiki/Approximation_error for expl. of rel and abs errors.

*/

template<typename DataAccessorT> static int

fitGslLevenbergMarquart(const typename DataAccessorT::TypeT & inData, typename CurveParamsT::TypeT * outResults,

double inEpsAbs, double inEpsRel, size_t inNumMaxIter = 500) {

GslMultiFitParmsT gslMultiFitParms(inData.size());

// Fill in the parameters

for (typename DataAccessorT::TypeT::const_iterator it = inData.begin(); it != inData.end(); ++it) {

size_t idx = std::distance(inData.begin(), it);

const DataPointT & dataPoint = DataAccessorT::getDataPoint(idx, it);

gslMultiFitParms[idx].y = dataPoint.y;

gslMultiFitParms[idx].sigma = 0.1f;

gslMultiFitParms[idx].pt = dataPoint;

}

// Fill in function info

gsl_multifit_function_fdf f;

f.f = FitTraitsT::gslFx;

f.df = FitTraitsT::gslDfx;

f.fdf = FitTraitsT::gslFdfx;

f.n = inData.size();

f.p = FitTraitsT::CurveParamsT::_Count;

f.params = & gslMultiFitParms;

gsl_vector * guess = gsl_vector_alloc(FitTraitsT::CurveParamsT::_Count); // Allocate the guess vector

FitTraitsT::makeGuess(gslMultiFitParms, guess); // Make initial guesses based on the data

// Create a Levenberg-Marquardt solver with n data points and m parameters

gsl_multifit_fdfsolver * solver = gsl_multifit_fdfsolver_alloc(gsl_multifit_fdfsolver_lmsder,

inData.size(), FitTraitsT::CurveParamsT::_Count);

gsl_multifit_fdfsolver_set(solver, & f, guess); // Initialize the solver

int status, i = 0;

// Iterate to to find a result

do {

i++;

status = gsl_multifit_fdfsolver_iterate(solver); // returns 0 in case of success

if (status) { break; }

status = gsl_multifit_test_delta(solver->dx, solver->x, inEpsAbs, inEpsRel);

} while (status == GSL_CONTINUE && i < inNumMaxIter);

// Store the results to be returned to the user (copy from gsl_vector to result structure)

for (size_t i = 0; i < FitTraitsT::CurveParamsT::_Count; ++i) {

typename FitTraitsT::CurveParamsT::TypeE idx = static_cast<typename FitTraitsT::CurveParamsT::TypeE>(i);

(*outResults)[idx] = gsl_vector_get(solver->x, idx);

}

// Free GSL memory

gsl_multifit_fdfsolver_free(solver);

gsl_vector_free(guess);

return status;

}

};

/**********************************************************************

* Gaussian fit traits

**********************************************************************/

class GaussianFitTraitsT {

private:

public:

struct CurveParamsT {

// b = base, p = peak, c = center in x, w = mean width (FWHM)

enum TypeE { B_IDX = 0, P_IDX, C_IDX, W_IDX, _Count };

struct TypeT : public std::array<float, TypeE::_Count> {

TypeT(const gsl_vector * inVec = 0) {

for (size_t i = 0; i < TypeE::_Count; ++i) {

TypeE idx = static_cast<TypeE>(i);

(*this)[i] = (inVec ? gsl_vector_get(inVec, idx) : 0);

}

}

};

};

/* Makes a guess for b, p, c and w based on the supplied data */

static void makeGuess(const GslMultiFitParmsT & inData, gsl_vector * guess) {

size_t numDataPoints = inData.size();

float y_mean = 0;

float y_max = inData.at(0).pt.y;

float c = inData.at(0).pt.x;

for(size_t i = 0; i < numDataPoints; ++i) {

const DataPointT & dataPoint = inData.at(i).pt;

y_mean += dataPoint.y;

if(y_max < dataPoint.y) {

y_max = dataPoint.y;

c = dataPoint.x;

}

}

y_mean /= (float) numDataPoints;

float w = (inData.at(numDataPoints - 1).pt.x - inData.at(0).pt.x) / 10.0;

gsl_vector_set(guess, CurveParamsT::B_IDX, y_mean);

gsl_vector_set(guess, CurveParamsT::P_IDX, y_max);

gsl_vector_set(guess, CurveParamsT::C_IDX, c);

gsl_vector_set(guess, CurveParamsT::W_IDX, w);

}

/* y = b + p * exp(-0.5f * ((t - c) / w) * ((t - c) / w)) */

static float fx(float x, const CurveParamsT::TypeT & inParms) {

float b = inParms[CurveParamsT::B_IDX];

float p = inParms[CurveParamsT::P_IDX];

float c = inParms[CurveParamsT::C_IDX];

float w = inParms[CurveParamsT::W_IDX];

float t = ((x - c) / w);

t *= t;

return (b + p * exp(-0.5f * t));

}

/* Calculates f(x) = b + p * e^[0.5*((x-c)/w)] for each data point. */

static int gslFx(const gsl_vector * x, void * inGslParams, gsl_vector * outResultVec) {

CurveParamsT::TypeT curveParams(x); // Store the current coefficient values

const GslMultiFitParmsT * gslParams = ((GslMultiFitParmsT*) inGslParams); // Store parameter values

//Execute Levenberg-Marquart on f(x)

for(size_t i = 0; i < gslParams->size(); ++i) {

const GslMultiFitDataT & gslData = gslParams->at(i);

float yi = GaussianFitTraitsT::fx((float) gslData.pt.x, curveParams);

gsl_vector_set(outResultVec, i, (yi - gslData.y) / gslData.sigma);

}

return GSL_SUCCESS;

}

/* Calculates the Jacobian (derivative) matrix of f(x) = b + p * e^[0.5*((x-c)/w)^2] for each data point */

static int gslDfx(const gsl_vector * x, void * params, gsl_matrix * J) {

// Store parameter values

const GslMultiFitParmsT * gslParams = ((GslMultiFitParmsT*) params);

// Store current coefficients

float p = gsl_vector_get(x, CurveParamsT::P_IDX);

float c = gsl_vector_get(x, CurveParamsT::C_IDX);

float w = gsl_vector_get(x, CurveParamsT::W_IDX);

// Store non-changing calculations

float w2 = w * w;

float w3 = w2 * w;

for(size_t i = 0; i < gslParams->size(); ++i) {

const GslMultiFitDataT & gslData = gslParams->at(i);

float x_minus_c = (gslData.pt.x - c);

float e = exp(-0.5f * (x_minus_c / w) * (x_minus_c / w));

gsl_matrix_set(J, i, CurveParamsT::B_IDX, 1 / gslData.sigma);

gsl_matrix_set(J, i, CurveParamsT::P_IDX, e / gslData.sigma);

gsl_matrix_set(J, i, CurveParamsT::C_IDX, (p * e * x_minus_c) / (gslData.sigma * w2));

gsl_matrix_set(J, i, CurveParamsT::W_IDX, (p * e * x_minus_c * x_minus_c) / (gslData.sigma * w3));

}

return GSL_SUCCESS;

}

/* Invokes f(x) and f'(x) */

static int gslFdfx(const gsl_vector * x, void * params, gsl_vector * f, gsl_matrix * J) {

gslFx(x, params, f);

gslDfx(x, params, J);

return GSL_SUCCESS;

}

};

typedef list<PixSubPosT> MyDataContainerT;

class MyDataAccessorT {

public:

typedef MyDataContainerT TypeT;

static DataPointT getDataPoint(size_t inIdx, TypeT::const_iterator inIt) {

const PixSubPosT & pos = *inIt;

DataPointT dp(get<0>(pos) /*inIdx*/, get<1>(pos) /*y*/);

return dp;

}

};

FrameT

rectify(const FrameT & inFrame)

{

float border = 3;

float border2 = 2.0 * border;

float width = fabs(std::get<0>(inFrame) - std::get<2>(inFrame)) + border2;

float height = fabs(std::get<1>(inFrame) - std::get<3>(inFrame)) + border2;

float L = max(width, height);

float x0 = std::get<0>(inFrame) - (fabs(width - L) / 2.0) - border;

float y0 = std::get<1>(inFrame) - (fabs(height - L) / 2.0) - border;

return FrameT(x0, y0, x0 + L, y0 + L);

}

int

main(int argc, char *argv[])

{

/* outerHfdDiameter depends on pixel size and focal length (and seeing...).

Later we may calculate it automatically wihth goven focal length and pixel

size of the camera. For now it is a "best guess" value.

*/

const unsigned int outerHfdDiameter = 21;

StarInfoListT starInfos;

vector < list<StarInfoT *> > starBuckets;

CImg<float> img;

long bitPix = 0;

// Read file to CImg

try {

cerr << "Opening file " << argv[1] << endl;

readFile(img, argv[1], & bitPix);

} catch (FitsException &) {

cerr << "Read FITS failed." << endl;

return 1;

}

// Create RGB image from fits file to paint boundaries and centroids (just for visualization)

CImg<unsigned char> rgbImg(img.width(), img.height(), 1 /*depth*/, 3 /*3 channels - RGB*/);

float min = img.min(), mm = img.max() - min;

cimg_forXY(img, x, y) {

int value = 255.0 * (img(x,y) - min) / mm;

rgbImg(x, y, 0 /*red*/) = value;

rgbImg(x, y, 1 /*green*/) = value;

rgbImg(x, y, 2 /*blue*/) = value;

}

// AD noise reduction --> In: Loaded image, Out: Noise reduced image

// NOTE: This step takes a while for big images... too long for usage in a loop ->

// Should only be used on image segments, later...

//

// https://cimg.sourceforge.net/reference/structcimg__library_1_1CImg.html

CImg<float> & aiImg = img.blur_anisotropic(30.0f, /*amplitude*/

0.7f, /*sharpness*/

0.3f, /*anisotropy*/

0.6f, /*alpha*/

1.1f, /*sigma*/

0.8f, /*dl*/

30, /*da*/

2, /*gauss_prec*/

0, /*interpolation_type*/

false /*fast_approx*/

);

// Thresholding (Otsu) --> In: Noise reduced image, Out: binary image

CImg<float> binImg;

thresholdOtsu(aiImg, bitPix, & binImg);

// Clustering --> In: binary image from thresholding, Out: List of detected stars, subimg-boundaries (x1,y1,x2,y2) for each star

clusterStars(binImg, & starInfos);

cerr << "Recognized " << starInfos.size() << " stars..." << endl;

// Calc brightness boundaries for possible focusing stars

float maxPossiblePixValue = pow(2.0, bitPix) - 1;

// For each star

for (StarInfoListT::iterator it = starInfos.begin(); it != starInfos.end(); ++it) {

const FrameT & frame = it->clusterFrame;

FrameT & cogFrame = it->cogFrame;

FrameT & hfdFrame = it->hfdFrame;

PixSubPosT & cogCentroid = it->cogCentroid;

PixSubPosT & subPixelInterpCentroid = it->subPixelInterpCentroid;

float & hfd = it->hfd;

float & fwhmHorz = it->fwhmHorz;

float & fwhmVert = it->fwhmVert;

float & maxPixValue = it->maxPixValue;

bool & saturated = it->saturated;

FrameT squareFrame = rectify(frame);

// Centroid calculation --> In: Handle to full noise reduced image, subimg-boundaries (x1,y1,x2,y2), Out: (x,y) - abs. centroid coordinates

calcCentroid(aiImg, squareFrame, & cogCentroid, & subPixelInterpCentroid, 10 /* num iterations */);

std::get<0>(cogCentroid) += std::get<0>(squareFrame);

std::get<1>(cogCentroid) += std::get<1>(squareFrame);

std::get<0>(subPixelInterpCentroid) += std::get<0>(squareFrame);

std::get<1>(subPixelInterpCentroid) += std::get<1>(squareFrame);

// Calculate cog boundaries

float maxClusterEdge = std::max(fabs(std::get<0>(frame) - std::get<2>(frame)), fabs(std::get<1>(frame) - std::get<3>(frame)));

float cogHalfEdge = ceil(maxClusterEdge / 2.0);

float cogX = std::get<0>(cogCentroid);

float cogY = std::get<1>(cogCentroid);

std::get<0>(cogFrame) = cogX - cogHalfEdge - 1;

std::get<1>(cogFrame) = cogY - cogHalfEdge - 1;

std::get<2>(cogFrame) = cogX + cogHalfEdge + 1;

std::get<3>(cogFrame) = cogY + cogHalfEdge + 1;

// HFD calculation --> In: image, Out: HFD value

// Subtract mean value from image which is required for HFD calculation

size_t hfdRectDist = floor(outerHfdDiameter / 2.0);

std::get<0>(hfdFrame) = cogX - hfdRectDist;

std::get<1>(hfdFrame) = cogY - hfdRectDist;

std::get<2>(hfdFrame) = cogX + hfdRectDist;

std::get<3>(hfdFrame) = cogY + hfdRectDist;

CImg<float> hfdSubImg = aiImg.get_crop(std::get<0>(hfdFrame), std::get<1>(hfdFrame), std::get<2>(hfdFrame), std::get<3>(hfdFrame));

maxPixValue = hfdSubImg.max();

//saturated = (maxPixValue > lowerBound && maxPixValue < upperBound);

saturated = (maxPixValue == maxPossiblePixValue);

CImg<float> imgHfdSubMean(hfdSubImg);

double mean = hfdSubImg.mean();

cimg_forXY(hfdSubImg, x, y) {

imgHfdSubMean(x, y) = (hfdSubImg(x, y) < mean ? 0 : hfdSubImg(x, y) - mean);

}

// Calc the HFD

hfd = calcHfd(imgHfdSubMean, outerHfdDiameter /*outer diameter in px*/);

// FWHM calculation --> In: Handle to full noise reduced image, abs. centroid coordinates, Out: FWHM value

MyDataContainerT vertDataPoints, horzDataPoints;

cimg_forX(imgHfdSubMean, x) {

horzDataPoints.push_back(make_pair(x, imgHfdSubMean(x, floor(imgHfdSubMean.height() / 2.0 + 0.5))));

}

cimg_forY(imgHfdSubMean, y) {

vertDataPoints.push_back(make_pair(y, imgHfdSubMean(floor(imgHfdSubMean.width() / 2.0 + 0.5), y)));

}

// Do the LM fit

typedef CurveFitTmplT<GaussianFitTraitsT> GaussMatcherT;

typedef GaussMatcherT::CurveParamsT CurveParamsT;

CurveParamsT::TypeT gaussCurveParmsHorz, gaussCurveParmsVert;

GaussMatcherT::fitGslLevenbergMarquart<MyDataAccessorT>(horzDataPoints, & gaussCurveParmsHorz, 0.1f /*EpsAbs*/, 0.1f /*EpsRel*/);

fwhmHorz = gaussCurveParmsHorz[CurveParamsT::W_IDX];

GaussMatcherT::fitGslLevenbergMarquart<MyDataAccessorT>(vertDataPoints, & gaussCurveParmsVert, 0.1f /*EpsAbs*/, 0.1f /*EpsRel*/);

fwhmVert = gaussCurveParmsVert[CurveParamsT::W_IDX];

}

// Create result image

const int factor = 4;

CImg<unsigned char> & rgbResized = rgbImg.resize(factor * rgbImg.width(), factor * rgbImg.height(),

-100 /*size_z*/, -100 /*size_c*/, 1 /*interpolation_type*/);

// Draw cluster boundaries and square cluster boundaries

const unsigned char red[3] = { 255, 0, 0 }, green[3] = { 0, 255, 0 }, yellow[3] = { 255, 255, 0 };

const unsigned char black[3] = { 0, 0, 0 }, blue[3] = { 0, 0, 255 }, white[3] = { 255, 255, 255 };

const size_t cCrossSize = 3;

// Mark all stars in RGB image

for (StarInfoListT::iterator it = starInfos.begin(); it != starInfos.end(); ++it) {

StarInfoT * curStarInfo = & (*it);

PixSubPosT & cogCentroid = curStarInfo->cogCentroid;

float & hfd = curStarInfo->hfd;

float & fwhmHorz = curStarInfo->fwhmHorz;

float & fwhmVert = curStarInfo->fwhmVert;

float & maxPixValue = curStarInfo->maxPixValue;

cerr << "cogCentroid=(" << setw(9) << std::get<0>(curStarInfo->cogCentroid)

<< ", " << setw(9) << std::get<1>(curStarInfo->cogCentroid)

<< "), " << setw(8) << ", maxPixValue: " << setw(8) << maxPixValue

<< ", sat: " << curStarInfo->saturated << ", hfd: " << setw(10) << hfd

<< ", fwhmHorz: " << setw(10) << fwhmHorz << ", fwhmVert: " << setw(10) << fwhmVert << endl;

const FrameT & frame = curStarInfo->clusterFrame;

FrameT squareFrame(rectify(frame));

rgbResized.draw_rectangle(floor(factor * (std::get<0>(frame) - 1) + 0.5), floor(factor * (std::get<1>(frame) - 1) + 0.5),

floor(factor * (std::get<2>(frame) + 1) + 0.5), floor(factor * (std::get<3>(frame) + 1) + 0.5),

red, 1 /*opacity*/, ~0 /*pattern*/);

rgbResized.draw_rectangle(floor(factor * (std::get<0>(squareFrame) - 1) + 0.5), floor(factor * (std::get<1>(squareFrame) - 1) + 0.5),

floor(factor * (std::get<2>(squareFrame) + 1) + 0.5), floor(factor * (std::get<3>(squareFrame) + 1) + 0.5),

blue, 1 /*opacity*/, ~0 /*pattern*/);

// Draw centroid crosses and centroid boundaries

const PixSubPosT & subPos = curStarInfo->cogCentroid;

const FrameT & cogFrame = curStarInfo->cogFrame;

const FrameT & hfdFrame = curStarInfo->hfdFrame;

rgbResized.draw_line(floor(factor * (std::get<0>(subPos) - cCrossSize) + 0.5), floor(factor * std::get<1>(subPos) + 0.5),

floor(factor * (std::get<0>(subPos) + cCrossSize) + 0.5), floor(factor * std::get<1>(subPos) + 0.5), green, 1 /*opacity*/);

rgbResized.draw_line(floor(factor * std::get<0>(subPos) + 0.5), floor(factor * (std::get<1>(subPos) - cCrossSize) + 0.5),

floor(factor * std::get<0>(subPos) + 0.5), floor(factor * (std::get<1>(subPos) + cCrossSize) + 0.5), green, 1 /*opacity*/);

rgbResized.draw_rectangle(floor(factor * std::get<0>(cogFrame) + 0.5), floor(factor * std::get<1>(cogFrame) + 0.5),

floor(factor * std::get<2>(cogFrame) + 0.5), floor(factor * std::get<3>(cogFrame) + 0.5),

green, 1 /*opacity*/, ~0 /*pattern*/);

// Draw HFD

rgbResized.draw_rectangle(floor(factor * std::get<0>(hfdFrame) + 0.5), floor(factor * std::get<1>(hfdFrame) + 0.5),

floor(factor * std::get<2>(hfdFrame) + 0.5), floor(factor * std::get<3>(hfdFrame) + 0.5),

yellow, 1 /*opacity*/, ~0 /*pattern*/);

rgbImg.draw_circle(floor(factor * std::get<0>(subPos) + 0.5), floor(factor * std::get<1>(subPos) + 0.5), factor * outerHfdDiameter / 2, yellow, 1 /*pattern*/, 1 /*opacity*/);

rgbImg.draw_circle(floor(factor * std::get<0>(subPos) + 0.5), floor(factor * std::get<1>(subPos) + 0.5), factor * hfd / 2, yellow, 1 /*pattern*/, 1 /*opacity*/);

// Draw text

const bool & saturated = curStarInfo->saturated;

ostringstream oss;

oss.precision(4);

oss << "HFD=" << hfd << endl

<< "FWHM H=" << fwhmHorz << endl

<< "FWHM V=" << fwhmVert << endl

<< "MAX=" << (int)maxPixValue << endl

<< "SAT=" << (saturated ? "Y" : "N");

rgbImg.draw_text(floor(factor * std::get<0>(subPos) + 0.5), floor(factor * std::get<1>(subPos) + 0.5), oss.str().c_str(), white /*fg color*/, black /*bg color*/, 0.7 /*opacity*/, 9 /*font-size*/);

}

rgbResized.save("out.jpeg");

return 0;

}

Related work

Recently I was contacted by Kim, an astrophotographer from the Netherlands. He took the star recognizer code and integrated it with his sky-view camera. I am publishing two images from the camera with his kind permission. Thanks @astroimagineer for sharing.

Last updated: June 9, 2022 at 23:44 pm

Hi Carsten, the test.fits file you offered can’t be read sucessfully that throws error after run program,can you give a correct one? Thanks

Hi John, you are right – the uploaded image was corrupted for some reason. I gzipped the file again and re-uploaded. Now it should work. You can find the new file here. Best regards

Hi Carsten,

I got it working. The key was “make shared” instead of just “make” for the cfitsio .

This made the extra libraries.

Thanks again.

Hi Carsten,

I’m making an autofocus routine, and I’m trying to compile and run your star_recognizer.cpp.

I’m using ubuntu 16.04.3 LTS.

I downloaded, configured, compiled, and installed the latest cfitsio.

I downloaded, configured, compiled, and installed the latest CCfits

If I use the command line you recommend,

g++ star_recognizer.cpp -o star_recognizer -lX11 -lpthread -lCCfits -lgsl -lgslcblas -std=c++0x

I get this error:

/usr/bin/ld: /tmp/ccexFUe5.o: undefined reference to symbol ‘ffgpv’

//usr/lib/x86_64-linux-gnu/libcfitsio.so.2: error adding symbols: DSO missing from command line

If I add this to the command line: -lcfitsio

Then it compiles. But when I run ./star_recognizer, I get this error

Opening file FOCUS011811.fit

ERROR: Mismatch in the CFITSIO_SONAME value in the fitsio.h include file

that was used to build the CFITSIO library, and the value in the include file

that was used when compiling the application program:

Version used to build the CFITSIO library = 2

Version included by the application program = 5

Fix this by recompiling and then relinking this application program

with the CFITSIO library.

Read FITS failed.

So, yes, I found old library files in /usr/lib/x86_64-linux-gnu/

libcfitsio.a

libcfitsio.so

libcfitsio.so.2

libcfitsio.so.2.3.37

But they’re all dated 2015-10-16.

So I replaced the libcfitsio.a with the new one.

Then I get a lot of other errors. It seems like the new version of cfitsio doesn’t make all the other library files, only the libcfitsio.a

Do you have any suggestions?

Thanks a million

Dan Gray

Hi Dan, I just saw your comment. Sorry for not answering earlier. Good to hear that you found a solution to the problem. In case you will have your autofocus code online, could you let me know the link? That would be great! Best regards, Carsten

Yes, I’ll put it online as soon as I’m done.

It’s working really well right now….

Dan

Hi Carsten

nice job

I eventually got the code to compile and run under Ubuntu 20.04 after quite a few problems which I have documented below

I also linked against libjpeg and this required the addition of a #define to the source

#define cimg_use_jpeg

default: star_recognizer

star_recognizer:

g++ star_recognizer.cpp -o star_recognizer -lX11 -lpthread -lCCfits -lgsl -lgslcblas -std=c++0x -ljpeg -L /usr/local/lib -L /usr/local/src/CCfits/ -L /usr/local/src/CCfits/.libs/

./star_recognizer test.fits

clean:

rm star_recognizer

The only remaining discrepancy is that your example on the page shows 41 recognised stars while on my machine 38 are found

Do you have any explanation for this discrepancy?

If you email me I can share the files with you

-David

Hi David, thanks for your kind message! I am happy to hear that the code was useful. I will have a closer look the next few days.

Hi Carsten,

Thank you for sharing all you learned on this topic! Clearly so much was put into this and your explanations are super helpful. The code built first try on Ubuntu 20.10, but could not write the jpeg. David’s comment helped there, although I did not have the library path problems. Simply adding -ljpeg and the #define did the trick.

I found all the required libraries via apt, in case this makes it easier for anyone else:

sudo apt install cimg-dev libccfits-dev libcfitsio-dev libgsl-dev libboost-dev libboost-program-options-dev

Awesome work, man!

–Rich

Hi Rich,

I am really happy to hear that the article and the code were helpful! Thanks for noting down the list of all required libraries.

Best regards and clear skies

Carsten

Is it needed to start from a stretched image or could this be in linear state?

Thanks.

Hi Massimiliano, thanks for your question. From my perspective it should also work with non-stretched images. However, my guess is that the results for a non-stretched vs a stretched image could vary because of the thresholder (depending on the stretch function used). I have not tried that.

Thanks I solved through an initial image stretch.

It works but the anisotropic blurring routine is very slow respect to the equivalent GREYCstoration process of Pixinsight.

It takes 1 min versus 1 sec in PI!

Any idea?